What No One Says About GPUs [Newsletter #76]

The Real Limit Is Power

Hello, AI enthusiasts from around the world.

Welcome to this week's newsletter for the AI and the Future of Work podcast.

For years, conversations about AI have focused on one question: which GPU brand delivers the best performance?

What if that focus has kept us from seeing the bigger picture?

Even the most powerful GPUs depend on one thing: a stable and cost-effective energy supply. Without it, scale becomes nearly impossible.

The challenge is clear. Our planet has limited energy, and that constraint shapes AI’s future more than any hardware breakthrough.

This week’s conversation explores why power has become the defining bottleneck, why it matters now, and why the tipping point might be closer than we think.

Let’s dive into this week’s highlights! 🚀

🎙️ New Podcast Episode with Darrick Horton, TensorWave CEO

Everyone is using AI, and we all appreciate it when the platforms work smoothly. More capable tools arrive constantly, and the pace keeps accelerating.

That speed creates a problem, but not the one most people expect.

Many assume the biggest challenge ahead is getting enough GPUs. GPUs deliver the parallel processing power needed for models that grow more complex with each generation. As Darrick Horton explains, access to them matters, and customers depend on it.

In his view, end users rarely think about what sits behind an AI application. They want reliability, ease of use, low costs, and accessibility. Reaching that standard comes with one major obstacle: power. Data centers everywhere are struggling to secure enough of it to keep up with the growing demand for AI workloads.

Darrick is the CEO and cofounder of TensorWave, a company created to democratize access to advanced AI computing. As part of this mission, TensorWave has deployed a training cluster with 8,000 GPUs designed for large-scale AI workloads.

Infrastructure challenges have defined much of Darrick’s career. He previously cofounded VMAccel, sold Lets Rolo to LifeKey, and cofounded the crypto mining company VaultMiner.

He sat down with PeopleReign CEO Dan Turchin to share why AI’s biggest barrier isn’t hardware. It’s power. To explain the issue, he asks a single question.

Where will we get the power to feed those GPUs?

The entire US is power constrained. The entire world is power constrained.

Darrick warns that, at the current pace, demand for AI will grow far faster than our available power supply. Addressing this requires a long-term vision that few are pursuing, yet a path forward exists.

This week's conversation covers this and much more:

- How Darrick and his team made a bold bet against the broader AI industry by choosing AMD instead of NVIDIA.
- TensorWave’s philosophy of building open ecosystems, because they’re the only path to real democratization of access.
- Neoclouds are rising as traditional clouds struggle to match the pace of AI’s evolution.
- Darrick acknowledges that brand loyalty has advantages and drawbacks, but supporting multiple chips often dilutes performance.
- Power demand is a major challenge, but Darrick highlights another one that receives less attention: network reliability.

🎧 This week's episode of AI and the Future of Work, featuring Darrick Horton, CEO of TensorWave

📖 AI Fun Fact Article

One of the biggest barriers to widespread AI deployment is access to power. The World Economic Forum projects that data center energy use will double between 2024 and 2027 due to the energy demands of AI workloads.

Energy is limited, and we are stretching every available resource. So the question becomes: how do we make AI more efficient?

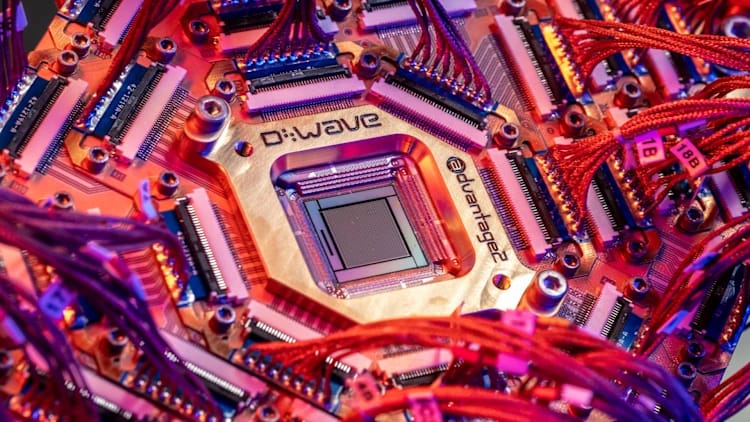

In Fast Company, Alan Baratz suggests an answer: quantum computing.

As he frames it, traditional computing evaluates one possibility at a time, an approach that worked for decades. AI now analyzes vast sets of possibilities, and its computing needs reflect that shift.

In his view, quantum computing can tackle these complex landscapes more efficiently, so problems that once required significant time or cost could become far easier to solve.

Source: D-Wave Quantum

PeopleReign CEO Dan Turchin believes quantum computing is a technology we must explore now. It is also a reminder of why investing in basic science research is so critical.

Even if commercial applications are a decade or more away, AI breakthroughs in areas like drug discovery, crop yields, cancer treatment, and climate change mitigation will likely depend on whatever comes after large language models.

Quantum computing could accelerate those breakthroughs in the same way nuclear energy did.

While quantum computing often feels like it is always a decade away from broad use, the experimentation required to understand its potential will spark new questions and new technologies that are otherwise impossible to imagine.

Breakthroughs that enabled human evolution (from fire to the wheel to the semiconductor) all came from long periods of innovation followed by bursts of rapid progress. Basic science is a necessity here, and a worthy use of public funds. It's the only path to ensuring humanity continues to evolve and benefit from innovative new technologies.

Listener Spotlight

Ralph teaches college physics in Phoenix.

His favorite episode is #271 with Dr. John Boudreau, a pioneer in the future of work and former Cornell professor who explains the new definition of work.

🎧 You can listen to that excellent episode here!

As always, we love hearing from you. Want to be featured in an upcoming episode or newsletter? Comment and share how you listen and which episode has stayed with you the most.

Your feedback helps us improve and ensures we keep bringing valuable insights to our podcast community. 👇

📃 Worth a Watch

There might now be more AI-generated content than human-created content circulating across the web. As surprising as that sounds, it aligns with the speed at which AI continues to evolve.

This content is often called AI slop, and its implications are more complicated than they appear. One research paper shows that, after rapid growth, AI slop plateaued at the end of 2024.

Source: graphite.io

Most of this content never appears in Google search results or ChatGPT responses.

Why does this happen?

Researcher Michael Pound from the University of Nottingham explains why this unusual situation matters and what we can do about it.

Check out his interview here.

Until Next Time: Stay Curious 🤔

We want to keep you informed about the latest developments in AI. Here are a few stories from around the world worth reading:

Investment in AI technology has crossed the $1 trillion mark, yet Google’s CEO warns that this trend is not entirely positive.

AI is helping hospitals expand access to prevention programs without lowering the quality of care. Here’s how.

Insurance companies are working to make AI systems safer to reduce the risk of large payouts. Here’s more.

👋 That's a Wrap for This Week!

This week’s conversation highlights one challenge that affects every part of the AI ecosystem: securing enough power. The path forward is not simple, and there is no single answer. Still, progress is possible when we align around shared goals.

That is why democratizing access to AI matters. We hope this conversation encourages you to look for practical ways to support that mission. 🎙️✨

If you liked this newsletter, share it with your friends!

If this email was forwarded to you, subscribe to our LinkedIn newsletter here to receive it every week.