
Unpacking Predictions for 2026 [Newsletter #85]

Dave Kellogg on SaaS, AI, and Trust

Hello, AI enthusiasts from around the world.

Welcome to this week’s newsletter for the AI and the Future of Work podcast.

Some people react to change. Others are good at foreseeing what is to come. Dave Kellogg belongs to the second group. For decades, he has been known for making predictions about enterprise software that are uncomfortable, easy to dismiss at first, and harder to ignore over time.

In this week’s episode, Dave returns to unpack his predictions for 2026, and to revisit a few calls from 2025 that now look less provocative and more explanatory. The conversation spans SaaS, AI, trust, and a growing inversion at the center of modern work.

The question running through it all is simple, but unsettling: Are our systems still working for us, or are we increasingly working for them?

Let’s dive into this week’s highlights! 🚀

🎙️ New podcast episode with Dave Kellogg, author of Kellblog

Making predictions is a risky proposition. Get one wrong and people are quick to point it out. Get one right and the reaction is quieter, especially when the prediction surfaces things most prefer not to confront.

That is the role Dave Kellogg has taken on. Each year, his predictions function less as forecasts and more as a mirror held up to the tech industry. Not a flattering one. A revealing one.

One of his most debated calls at the start of 2025 was the “death of SaaS.” A year later, Dave labels it a partial hit. Not because SaaS is thriving, but because it is often misunderstood. AI can generate interfaces quickly. It cannot replace decades of operational edge cases, compliance requirements, scale, and maintenance.

That gap explains why large SaaS platforms endure while weekend-built alternatives rarely do. Enterprises do not buy software for novelty. They buy it to reduce risk.

Dave brings this perspective from decades spent inside enterprise software. He is a serial CMO and CEO, an investor, an Executive-in-Residence at Balderton Capital, and the author of Kellblog. His work advising and leading SaaS companies from early stages through scale is why he is often called the SaaS Whisperer.

In this episode, Dan Turchin, CEO of PeopleReign, sits down with Dave to unpack his predictions for 2026. The theme running through them is subtle and unsettling: we are increasingly working for algorithms.

From CAPTCHAs to SEO to productivity tracking, this shift shows up in everyday systems. Behavior is shaped by what algorithms reward, turning automation into something we manage rather than something that simply serves us.

The conversation covers this and much more:

How everyday behaviors, from SEO to productivity tracking, show what it means to work for algorithms, and how that dynamic inverts the original promise of AI serving humans.

Why AI will change jobs, but not in the way most people expect.

How AI has made it cheap to produce content at scale, turning trust into a scarce asset.

Why shifting market conditions are forcing new investment rules. The Rule of 40 is giving way to the Rule of 60, where companies are expected to grow while generating real cash.

How the largest venture capital funds are evolving toward financial services models, driven more by fee economics than by long-term operating outcomes.

🎧 This week’s episode of AI and the Future of Work features Dave Kellogg, venture capitalist and author.

Listen to the full episode to hear why Dave's optimism remains intact, but conditional. AI will not doom us, but it will force us to prioritize clarity and trust.

📖 AI Fun Fact

Is rapid adoption the same as trust?

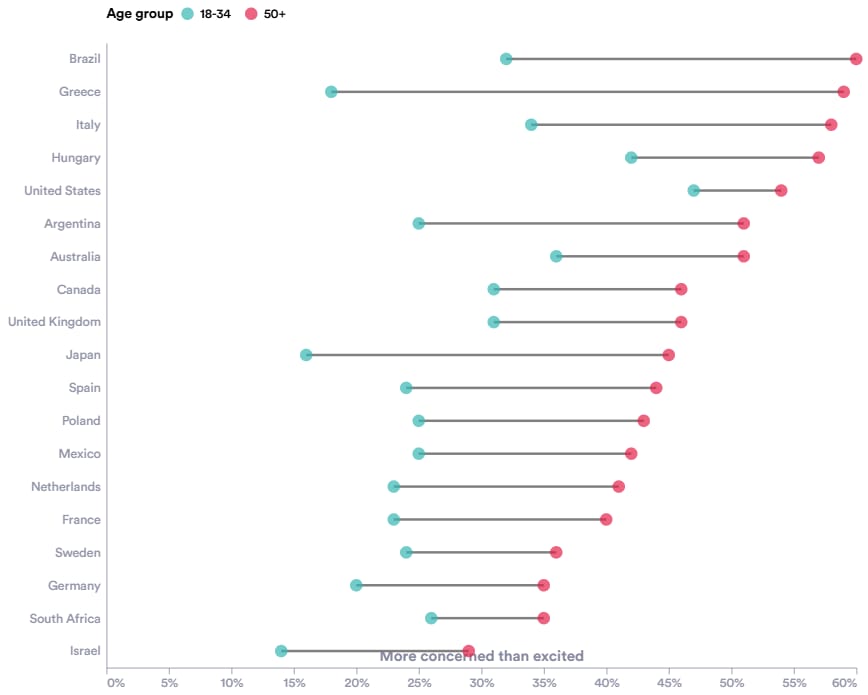

AI adoption is accelerating, and it is often assumed that trust in AI systems is rising alongside it. But research suggests a more complicated picture. Writing in the Economic Observatory, Finn McEvoy notes that despite widespread use of AI tools, there is still limited understanding of how much the public actually trusts these systems.

Survey data shows that people tend to feel both anxious and excited about AI at the same time, and this ambivalence is not limited to any one region. In the United States, concern tends to outweigh excitement, driven largely by fears about job displacement and privacy. In South Korea, by contrast, most respondents report feeling equally concerned and excited about AI.

McEvoy also points to a consistent divide between richer and poorer countries. Higher-income countries are generally more pessimistic about AI’s impact, while lower-income countries are more welcoming, often viewing AI as a way to accelerate development in areas such as infrastructure and public services.

What emerges is not a simple story about adoption or rejection, but about trust—and how unevenly it is formed. As Dave Kellogg discusses in this week’s episode, AI is flooding the world with output at unprecedented speed, which makes trust harder to earn, not easier. In that context, rising usage doesn’t automatically signal confidence. It often signals necessity.

You can learn more about this survey here.

📽️ Worth The Watch

Early fears about AI and software development were not just speculative. A 2025 study cited by Dig Watch documented how younger software developers were disproportionately affected as AI tools took over routine tasks. The article shows how automation pressures landed first on less experienced engineers, raising early concerns about where displacement would hit hardest.

Fast-forward one year, and the story becomes more complicated. As Business Insider reports, many experts now argue that AI is unlikely to eliminate coding jobs altogether. Instead, they describe a shift in how development work is structured, with AI assisting engineers rather than fully replacing them.

Source: IT Pro

What stands out is that some of the companies that moved fastest to automate development are now reassessing those decisions. This video from Economy Media explores how early bets on AI-led development often overestimated what current tools could deliver in real production environments, especially in complex systems where judgment, context, and accountability still matter.

That pattern echoes a broader theme from this week’s conversation with Dave Kellogg. As he notes, AI expands output and lowers the cost of creation, but it also raises the bar for trust, quality, and judgment. In software development, that means the work is changing faster than the roles are disappearing. You can see how that recalibration is playing out by watching the video above.

Listener Spotlight

Marianne in Sebastopol listens to the podcast while painting. Her favorite episode features Professor Meredith Broussard, author of More Than a Glitch, on algorithmic accountability. It’s one of our favorites as well. We’re glad it resonated with you.

🎧 You can listen to that excellent episode here!

As always, we love hearing from you. Want to be featured in an upcoming episode or newsletter? Comment and share how you listen and which episode has stayed with you the most.

Your feedback helps us improve and ensures we continue bringing valuable insights to our podcast community. 👇

Until Next Time: Stay Curious 🤔

We want to keep you informed about how AI is reshaping work, education, and society. Here are a few stories from around the world worth reading:

What if there were a teacher-less school? A chain of private schools is experimenting with AI-driven education models for the next generation of students. Here’s more.

Concern about AI and jobs is widespread, but the impact may not be evenly distributed. This article explains why women are likely to be more affected by these shifts than men.

Anxiety about AI is not new. This piece argues for shifting the focus from fear to preparation.

If You’re Using AI at Work, This Is for You

As we explore how AI is changing work, we’ve heard from many guests that responsibility is no longer optional.

We recently launched the AI @ Work – Level One Leaders certification to help turn those ideas into action. It covers the fundamentals of responsible, human-first AI use, without hype or jargon.

Think of it as a practical guide for leaders who want to use AI wisely, not recklessly.

It’s free and designed for people using AI at work.

Register here to get all the details on how to access it.

👋 That's a Wrap for This Week!

Each year, our lives become more intertwined with AI. It is evolving quickly, and outcomes don’t always match early expectations.

Some predictions will need revision. Others will miss entirely. Across both, one constant remains: trust continues to matter.

This week’s conversation invites a more grounded question. How do you use AI to strengthen your work without outsourcing judgment? And how do you earn trust in a world where output is cheap, but credibility is not?

We hope it encourages you to explore how AI can support your work, while keeping human values firmly in place.

If you liked this newsletter, share it with your friends!

If this email was forwarded to you, subscribe to our LinkedIn newsletter here to get it every week.